Watch The Video

Watch on YouTube, or watch A(i) Modest Proposal on DjangoTV.

Abstraction

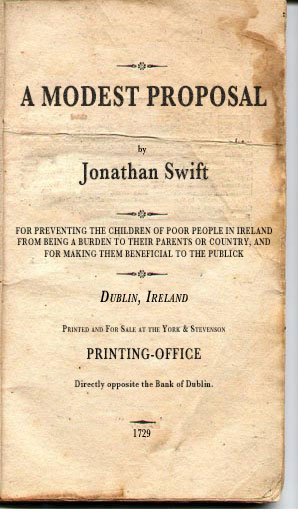

In 1729, Jonathan Swift presented an elegant and well-thought-out solution to the problem of homelessness and poverty plaguing Ireland. He argued that his solution would result in a much more productive and fulfilling society. While not many could digest the meat of his proposal, it was still effective in exposing a widening gap of social inequality and abuse.

Is it possible that Swift's proposal can be applied within our technosphere? Did he provide us with a recipe for success, or at least little nuggets of wisdom to chew on?

Many attending this conference may be keenly aware of the dichotomy between open source and big tech. There is a relationship that always seems to be at risk of imploding. Should we be encouraging new (and existing) engineers to participate in open source, in spite of this tenuous relationship? If this topic whets your appetite, you'll definitely want to come to see what's cooking.

Before I Get Into It

It's not a stretch to say that this was one of my most difficult talks to make. Not necessarily in the sense that it was hard to research or to write, per se.

But rather, there was an over-abundance of information—an embarrassment of riches as far as sources go. I was adding and subtracting information even hours before my talk was due to start.

It was also hard because I was attempting to do something kind of different.

Hearkening back to my college days, I employed a pastiche of Jonathan Swift's A Modest Proposal as a backdrop to my talk.

Well, backdrop is not really accurate. It comprised half of the time allotted.

If you'd like to read the original A Modest Proposal, it is available through Project Gutenberg.

This was a big risk. But I felt like holding on to my retelling was worth it.

I was, in a way, demonstrating the absurdity of the times we are living in, where LLMs synthesize text that has been gobbled up in gluttonous fashion into sentences and paragraphs that are meant to provide meaning.

Wasn't I doing the same thing? Wasn't I just copying the words of a literary giant and remixing them for the modern day? Is that plagiarism?

Regardless of what the answers are to those questions, the one key difference for me is in intent.

LLMs have no intent. They don't mean to say anything, because they are statistical averaging algorithms and nothing more. They have no sense of satire, irony, or absurdity—they find absolutely no pleasure in the usage of an Em Dash.

Of course, "statistical averaging algorithm" is a terrible marketing term, so "artificial intelligence" it is.

The anthropomorphism is no mistake. It is a cynical and clever guise to obfuscate the nature of the technology, providing cover for the cruel, self-serving oligarchs that stand to benefit from the unsuspecting masses.

I'm listing here some of the references made during the talk, and maybe a few more for good measure. But you're bound to find a plethora of additional data that probably materialized within the time it took you to read this.

Notes

My pastiche of A Modest Proposal is available on my blog.

We're living in a tech landscape that favors wealthy venture capitalists and ultra-wealthy CEOs. Their worldview is intoxicated with visions of unbridled and unregulated technological growth, no matter what it costs.

Tech CEOs and venture capitalists are leading the charge with AI technology.

They want unregulated technological growth at all costs.

Two quick quotes just to demonstrate:

We should raise everyone to the energy consumption level we have, then increase our energy 1,000x, then raise everyone else's energy 1,000x as well.... We believe we should place intelligence and energy in a positive feedback loop, and drive them both to infinity.

—Andreessen Horowitz, Techno-Optimist Manifesto

We don't actually have that much time. So what can you do? Well, you can have a life of stasis, where you cap how much energy we get to use... Stasis would be very bad I think... But the solar system can easily support a trillion humans.

—Jeff Bezos, Business Insider

Longtermism

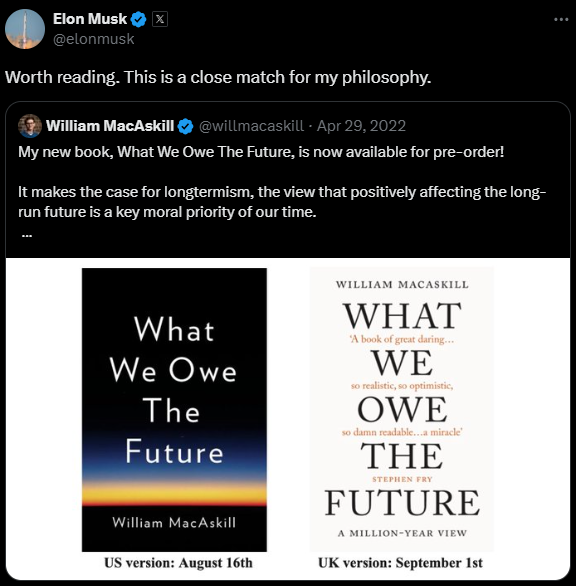

Much of this stems from a book by William MacAskill called "What We Owe the Future," where he describes the philosophy of Longtermism.

In short, optimize technological development today for the "good" of generations that will come after us.

Many CEOs and VC investors subscribe to this type of thinking.

Boosters and Doomers

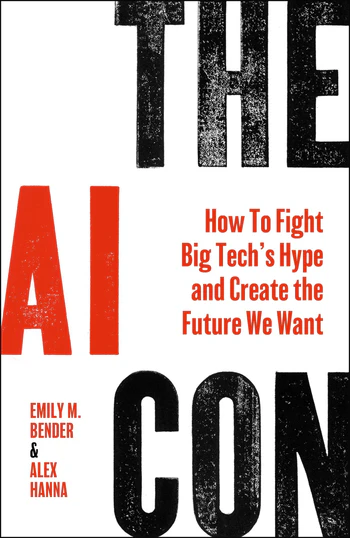

In The AI Con, Dr Emily Bender and Dr Alex Hanna also contextualize the hype around AI technology in terms of two groups: Boosters and Doomers.

Boosters imagine a world where AGI (Artificial General Intelligence) will be smart enough to cure cancer, create unending economic growth, and allow humans to live forever.

Doomers imagine a world where AGI becomes sentient and decides that what is best for this planet is to eliminate humans.

Both of those scenarios are designed to distract from real harms caused by current technological advances, and to place power in the hands of those who would prospectively "control" AGI.

TESCREAL

Many of the viewpoints held by these powerful billionaires, executives, and venture capitalists can be summed up by the neologism TESCREAL, which stands for:

- Transhumanism

- Extropianism

- Singularitarianism

- Cosmism

- Rationalism

- Effective Altruism

- Longtermism

Compute

Value is being expressed in terms of compute.

I wonder if the future looks something more like universal basic compute than universal basic income.

—Sam Altman, All In Podcast (ep. 178)

In August, Fortune ran an article on Sam Altman admitting that OpenAI "totally screwed up" the launch of GPT-5, and in the same breath, saying that the company plans to spend trillions of dollars on data centers.

How much is a trillion? Here's a sense of scale:

- 1 thousand seconds = ~17 minutes

- 1 million seconds = ~11.6 days

- 1 billion seconds = ~32 years

- 1 trillion seconds = ~31,710 years

So, spending trillions of dollars is almost incomprehensible. Who's going to pay for that?

Sam Altman was asked the same question in a room full of investors. He told them that he didn't know how to generate a return for them, but that once AGI was online, it would figure it out for them.

Motivations

There are countless other examples showing that nearly all the leaders and executives at OpenAI, Anthropic, Google, Microsoft, Amazon, and xAI, as well as at lesser-known yet notable entities, hold these kinds of views and are proactively working to accelerate their realization. Venture capitalists, whether true believers or not, are buying in.

For more context, you can read Adam Becker's book titled "More Everything Forever" which examines these characters and their motivations. (Spoiler alert: It's in the title.)

Drawing a line between TESCREAL, AGI, and eugenics is frighteningly easy.

The term TESCREAL was coined in a paper published in First Monday last year.

https://www.dair-institute.org/projects/tescreal/

many of the very same discriminatory attitudes that animated eugenicists in the past (e.g., racism, xenophobia, classism, ableism, and sexism) remain widespread within the movement to build AGI, resulting in systems that harm marginalized groups and centralize power, while using the language of “safety” and “benefiting humanity” to evade accountability.

—Timnit Gebru and Emile P. Torres, The TESCREAL bundle: Eugenics and the promise of utopia through artificial general intelligence

Number Go Up

On July 18, 2025, economist Matt Stoller wrote a post in his newsletter titled "The Number Go Up Rule: Why America Refuses to Fix Anything".

From the article:

A society under 'number go up' tends towards evil. The men and women behind an excessively high rate of return often make deeply sinful and immoral choices, little different than the Confederate plantation owners did in whipping slaves or lords in castles did when abusing serfs.

From the talk:

"Unregulated, unchecked, and unopposed, tech executives and venture capitalists just want that number to keep going up... their valuations are astronomical... their user growth is unprecedented. There is no end in sight and they will stop at nothing."

Goes Without Saying

There are MANY concerns which I didn't spend a lot of time highlighting.

Before listing some of those concerns here, I want to point out that we live in polarizing times.

It can be enticing to take these points as de facto argument stoppers, negating the need for nuanced discussions on how best to move forward.

For example, AI detractors can point to the projected, massive environmental impact of the datacenters and compute necessary to prop up the industry. But this also sidesteps the already massive number of datacenters necessary to provide entertainment services, such as streaming video and other data-intensive tasks.

My main hope here is not to use these points as argument stoppers. Instead, they should open up discussion and real thought into how we could correctly assess these scenarios and mitigate any dangers.

Or should we ignore them?

In no particular order, here is a rapid-fire list of harms pointed out in my talk:

Massive data theft by AI companies, and the subsequent disregard and destruction of economies around artists, creators, and other content producers (including local beat reporters). See:

Carbon footprint of these technologies. Whether less than, equal to, or greater than current technologies, the scale at which they are being built is unbridled and alarming. See:

- Zuckerberg says Meta will build data center the size of Manhattan in latest AI push

- These Data Centers Are Getting Really, Really Big

- AI Needs More Abundant Power Supplies to Keep Driving Economic Growth

The effects on education, learning, mental health, and other social ecosystems. See:

Digital colonialism and exploitation of the Majority World/Global South. See:

The usage of AI technologies in health care, government, police, and military—already creating massive risk and having deadly consequences. See:

Usefulness

I didn't spend any time talking about whether these tools are useful or not. In the engineering space, I think the jury is still out.

I have several very respected friends who are using the technology and finding optimizations in their workflows. They are able to build things that they otherwise would not have had time for.

But I also know other very respected friends who have tried the technology and found it wanting for a lot of reasons beyond the social implications listed above.

I glossed over this during the talk, but one thing I wonder about is, what is the purpose of using these tools?

Is it to make our lives easier? Is it to ease our burden at work? To automate the things that annoy us? Is it to increase productivity? Are we attempting to provide more value to our employers? To the shareholders?

And even if we have answers to all those questions, what is the cost of enabling all those things? I'm not talking about the monetary cost, but the cost to society at large.

Summary

From the talk:

My focus here was to unveil the stated goals of those who are leading the charge with these technologies and making decisions that have real-life consequences in the here and now. It is important to understand their goals and motivations. It is important to understand their vision.

Left unchecked, these paragons of delusion will gladly sacrifice us today for their hallucinations of tomorrow.

Our best bet is to band together, and through collective action, demand transparency, regulation, and accountability...

I know all of that is easier said than done, but we have to start somewhere.

Because if the future we have to look forward to is the one seen through the eyes of venture capitalist and eugenicist billionaires, then they might as well just go ahead and eat me.