The LLM Ate My Baby

And Other Horrors

The abyss that exists between factions of LLM users and abolitionists stems from differing perspectives on the harms produced by the industry.

I refuse to imagine that the majority of LLM users are so enamored with this technology that they purposefully ignore the possibility that their prompts are accelerating the destruction of humanity as we know it.

The offset of harms and benefits must somehow be worth it.

Either the benefits afforded are substantially better than an alternate timeline without these tools, or the harms are overstated and merely collateral damage of inevitable progress.

Or something in between.

The Absurd

If it could be proven, without any doubt, that for each prompt to an LLM, a human child must be sacrificed into the jaws of a hungry datacenter in order to extract the child's essence, I believe that most people would never prompt again.

(It is sad that we live in a world where "most" is the best we can do.)

Maybe somewhere in between, users might think to themselves, "Sure, one child will be sacrificed, but my prompt will save ten children's lives in return!"

Pardon me.

I'm trying to balance this absurd math equation (sacrificial child < 10 children saved) in order to better understand the abyss.

Unfortunately, in real life, the composition of that equation is heavily obfuscated, making it harder to do the math.

If there were a clear and overwhelming consensus as to how our actions affect the world around us, would we make different choices?

We may never know, as sadly, we live in a world of obfuscation.

WMD

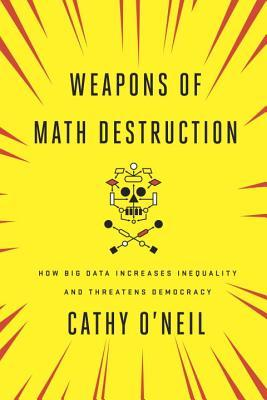

Cathy O'Neil's book, Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, was released a decade ago.

In the book, O'Neil exposes how big data is used in fields like insurance, advertising, education, and law enforcement, and how the resulting algorithms are then applied to exploit, manipulate, and harm the disenfranchised, particularly because the underlying data amplifies inequality, racism, sexism, and other harmful vectors.

Over a decade ago, the public was told that these complex algorithms were impartial. You know, because computers do not show prejudice.

But instead, what was happening is that "lucky" people became luckier, and "unlucky" people became unluckier.

The companies loved the algorithms because they made it very difficult to hold anyone accountable. After all, mathematics is neutral. (Even if big data is not.)

If a person was denied a loan, it wasn't because someone discriminated against them; it was the Algorithm.

If a person was classified as having a high risk of relapsing into criminal behavior, they might get a longer prison sentence. No human was responsible for the decision.

It was the Algorithm.

Opaque

It makes perfect sense why corporations want to be opaque.

Firstly, it hinders independent scrutiny of how their business practices affect the general population, allowing them to launch questionable products without any friction.

Secondly, it makes it nearly impossible to regulate, enforce, and hold them accountable, in spite of any tangible evidence of malfeasance.

Which brings me back to how I opened.

We don't have any way of knowing if the AI companies are throwing babies on an altar to the god of AGI. They're probably not doing that, but even if they were, they wouldn't tell us about it.

But similar to what these companies were doing a decade ago with the Algorithm, they are again blindsiding the public by hiding the risks of depending on biased data.

They are also hiding the vast network of surveillance that is indeed being fed to their god of AGI.

A decade ago, the public took the Algorithm for granted.

The companies profited from the harm to already marginalized communities, and the public's lack of skepticism about the effects of the Algorithm put us all on the back foot.

Strides were made to inform the public and to course correct. But before long, the Algorithm was pushed aside by the all-encompassing, productivity-enhancing AI.

As before, being skeptical (or being situationally aware, if you will) about the motives of profit-driven corporations is, in itself, a public good.

Diffused

Cathy O'Neil recently hosted Şerife Wong on the AI Skeptics podcast.

On that episode, Wong recounts the history of Stable Diffusion and how it was originally discussed on Reddit prior to its release.

Many concerns were raised about releasing it to the public.

After all, if anyone could use it, without any rules, awful things could happen, up to and including CSAM (child sexual abuse material).

It was released despite these warnings.

The result was predictably terrible. Racist images proliferated across the web, as did the aforementioned CSAM and pornography, a large portion of which included acts of violence and abuse toward Asian women.

And nothing happened.

No one was held accountable.

Soon after, OpenAI released DALL-E.

The executives at these AI companies know that policymakers are years behind in understanding this technology, and therefore meaningful regulation is non-existent.

Any attempt at mitigating these risks through any kind of regulation could be many years away.

Suspicious Face

In the years after 9/11, I mused about how I was often "randomly selected" to be searched at airports, almost without fail.

Although I was born in Honduras, my college friends would sometimes call me "Osama," which I presume exposes the biases of the time. Apparently, the TSA agents thought the same.

While I was writing this piece, a friend sent me this article on an alleged data leak at Persona, the Peter Thiel-funded identity-verifying app.

"You hand over your passport to use a chatbot, and somewhere in a datacenter in Iowa, a facial recognition algorithm is checking whether you look like a politically exposed person. Your selfie gets a similarity score. Your name hits a watchlist. A cron job re-screens you every few weeks just to make sure you haven’t become a terrorist since the last time you asked GPT to write a cover letter,” the report alleges.

It may be that data is now resurfacing the same biases that the TSA agents (or college friends) held 20 years ago. Reportedly, the algorithm "checks for whether a face looks 'suspicious'."

But this is far more sinister.

From the same article:

However, deeper concerns arise about the source of the data. When users submit their IDs and selfies for verification on popular platforms, the data likely ends up being analyzed and resold for many other purposes.

There is no world where this is a good thing.

When tech companies announce new features, they hope for more access to our data. And more access to our data means more possibility for surveillance and privacy rights violations.

But It's A Tool

Up to this point, I've still been dancing around the premise that I started on.

That is to say, there are bad things happening all around us, but how do they relate specifically to the tools we use?

A little more precisely, my main point up to now is that regardless of what one thinks about the technology itself, the underlying data that feeds these systems is extremely problematic.

Even with all the amounts of reinforcement learning thrown at these models (and the ethical dilemmas that brings up), the data problem is not going away.

In fact, it can only get worse.

These companies aren't selling you tools to make your life better, more productive, or more efficient because they care about you.

In fact, they're barely selling anything at all. What they want is access to your information. Your email. Your calendar. Your photos. Your contacts.

Data.

And without proper oversight, their modus operandi seems to be along the lines of "just trust us."

For now, let's pretend the data problem is not a problem.

In other words, some LLM users may be aware of the inherent issues with model composition, but feel that responsible usage of the tools counteracts the negative externalities.

Tools are neutral.

They're apolitical.

After all, someone can use a spreadsheet to balance their budget or to commit financial crimes, right?

I don't agree that tools are apolitical, but I won't make that argument here.

However, tools can be designed badly, with deadly consequences.

A glass ketchup bottle is bad design. A password field that disallows copy/paste is bad design. Lead-based paint. A car without handles. A large boat with only enough lifeboats to accommodate half the passengers.

By Design

But why do I think LLM-based tools are badly designed?

They clearly work in the hands of skilled users.

They reduce the time and effort it would take to accomplish certain tasks that seemed insurmountable before.

Allow me a small, somewhat unrelated tangent...

Over 15 years ago, there was a popular belief that providing micro-loans to individuals (usually women) in poverty-stricken areas was a way to make the most positive impact in those regions.

There were success stories about individuals who were able to use the money to start a business, which then led to financial sustenance for the individual and their family.

Around that time, a book came out called More Than Good Intentions: How a New Economics Is Helping to Solve Global Poverty, which challenged that notion.

A researcher and an economist teamed up to study this program at the ground level for a couple of years.

Stories of success were actually far less common than reported. Additionally, they found that measuring success was extremely difficult and contextual.

For example, if one individual in the community experienced success with the micro-loans program, what effects did that have for the immediate community?

What constituted success in the first place?

Oftentimes, Western-based aid programs made assumptions about what made people happy/successful, and many times, those assumptions were wrong.

On the whole, the micro-loan program wasn't as beneficial to the community as initially thought.

What does that have to do with badly designed tools?

While some users may experience some form of success while using these tools, there are equally damning consequences to other users, as well as negative externalities to the greater community.

There are effects that extend far beyond one person's adoption. (AI slop PRs, security vulnerabilities, delusions, misinformation, CSAM, pornography, deep fakes, scams, etc.)

In addition, the cost for using these tools isn't $8/month, or $20/month, or even $200/month. It is that plus everything it devours in order to get better, and better, and better.

And better?

Long Term

I've already made the case that the leaders of these technologies subscribe to an ideology with disturbing echoes of eugenics and a techno-optimist dystopia.

The history of Silicon Valley is no different.

Sure, users of LLM tools could use their newfound power of 10x productivity to usher us into a different, better world.

They could create applications (or something else?) that somehow we were unable to make before because we were too slow?

Maybe that will bring about an economic boom, a breakthrough in medicine or science, or empowerment to would-be entrepreneurs who would otherwise be unable to prop up their ideas into successful businesses.

But on the way to that future, we will have ceded so much agency and control to the oligarchs who are envisioning a world of liberal eugenics.

A couple of years ago, Victor Wang wrote a post, published on Cathy O'Neil's mathbabe blog, that asks the question, "Who gets to live in a techno-utopia?"

Wang describes the feeling of attending a conference where the speaker, Jonathan Anomaly, spoke specifically about liberal eugenics.

This is “liberal” in that no existing people are harmed and there is no obvious coercion. Instead, voluntary genetic selection and enhancement is mediated via technological advancements. This is analogous to existing practices in genetic screenings of embryos. For instance, parents today have the choice to abort a fetus if certain genetic conditions such as Down syndrome are detected.

Wang describes their feeling at the end of the talk. No one challenged the speaker's assertions.

No one pushed back.

Walking out of the talk, I overheard a young attendee casually mention “wow I had no idea technology was so advanced now”. As someone who lives with a disability (and likely candidate for embryonic annihilation), it is a surreal experience to be in a room full of people who believe that they would like to do good in the world and at the same time be a person who they would prefer not to exist at all.

Fend For Yourselves

The techno-utopia that the oligarchs are envisioning is one that is exclusionary by nature.

These companies will steamroll over anything and anyone that stands in their way toward their end goal.

If a community pushes back on a disruptive datacenter, they will just move on to the next town to exploit.

Or worse yet, they'll just employ the help of law enforcement to arrest you if you speak out against them for a few seconds too long.

In the meantime, while we are fending for ourselves, the tech companies have funded lobbying groups with billions of dollars.

These lobbying groups have ties to "longtermism," the kind of effective altruists that push for a specific type of AI policy focused on a specific kind of techno-utopia.

[Edit: I may write about Longtermism more in depth at a later date (maybe). But note that the way I'm using it here is in how the tech oligarchy has adopted the term/philosophy for a future that looks very much like a result of liberal eugenics.]

These goals, shrouded under the veil of "AI Safety," are a form of coercion, as they ultimately seek to control the narrative of how these technologies will affect the world.

They are defining the equation of who is worth saving and who is worth sacrificing.

They are deciding what our future looks like.

That absurd equation looks like this: billionaires > undesirables.

I don't believe that we are anywhere near closing the abyss.

The arguments have been laid out, and we all choose how we defer our judgement about how these technologies are affecting the world.

But even if you believe that the tools are not problematic on their own, it is clear that these companies are notoriously unhinged.

Just a few days ago, I learned of Apple's claim that they own their employees' cervical mucus. (I happen to believe their research was for devices meant to harvest even more of our personal data.)

On Wednesday, Mark Zuckerberg was testifying in LA about whether his company built an app meant to "addict the brains of children."

It is distressing to see some of the evidence provided for this case. Internal documents show employees describing themselves as drug dealers, writing "we're basically pushers."

I don't even feel like mentioning the clusterfuck involving Ars Technica and the OpenClaw rogue agent making up shit about the matplotlib maintainer.

Was anyone held accountable?

Of course not. But the creator of OpenClaw works at OpenAI now, in case that's reassuring to you...

We're bound to continue arguing about whether these tools work, or whether the planet will burn at a quicker rate, or whether these companies can/should be held liable for harms to users of their products.

But even in a world where everything seems absurd, the cost equation shouldn't be this hard to balance.